[Tutorial] Building a k3s Cluster from Scratch

Tip: This tutorial was written on Linux with the fish shell, so some command syntax may differ slightly from Bash. Adjust the commands to match your own environment.

Introduction

k3s is a lightweight Kubernetes distribution originally developed by Rancher Labs, and one of the most widely used lightweight Kubernetes options.

Compared with traditional server management (1Panel, Baota, plain SSH, and so on), k3s has a steeper learning curve and requires you to understand more container orchestration concepts. Once you are past that, you gain the following:

Core Advantages of k3s

- Lightweight and Efficient - Single binary file, memory usage < 512MB, perfect for low-spec VPS

- Production Ready - Fully compatible with Kubernetes API, smooth migration to standard K8s

- Declarative Operations - Describe desired state with YAML, system automatically maintains it

- High Availability Guarantee - Automatic fault recovery + multi-node load balancing

- Out of the Box - Built-in networking, storage, Ingress and other core components

Through k3s, we can consolidate multiple cheap VPS into an enterprise-grade highly available cluster, achieving automation levels difficult to reach with traditional operations.

Target Audience and Preparation

Suitable For

- Developers with some Linux foundation

- Those wanting to transition from traditional operations to container orchestration

- Tech enthusiasts wanting to build personal high-availability services

Prerequisites

- Familiar with Linux command-line operations

- Understanding of Docker container basics

- Basic networking knowledge (SSH, firewall)

Learning Outcomes

After completing this tutorial, you will master:

- Using k3sup to quickly deploy k3s clusters

- Understanding the role of k3s core components (API Server, etcd, kubelet, etc.)

- Replacing default components to optimize performance (Cilium CNI, Nginx Ingress, etc.)

- Deploying your first application and configuring external access

- Basic cluster operations and troubleshooting techniques

Deployment Planning

k3s comes with a set of streamlined components by default. To meet production-level requirements, we need to plan in advance which modules to keep or replace. The following table shows the recommended trade-off strategy:

| Component Type | k3s Default | Replacement | Reason | k3s Flag (passed via --k3s-extra-args) |
|---|---|---|---|---|
| Container Runtime | containerd | - | Keep default | - |
| Data Storage | SQLite / etcd | - | SQLite for a single node, embedded etcd for a multi-server cluster | - |
| Ingress Controller | Traefik | Nginx Ingress / others | Team familiarity, different feature requirements | --disable traefik |
| LoadBalancer | Service LB (Klipper-lb) | None | Load balancing is handled externally by Cloudflare, so no in-cluster load balancer is deployed | --disable servicelb |
| DNS | CoreDNS | - | Keep default | - |
| Storage Class | Local-path-provisioner | Longhorn | Distributed storage, high availability, backup capability | --disable local-storage |
| CNI | Flannel | Cilium | eBPF performance, network policies, observability | --flannel-backend=none --disable-network-policy |
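The flags in the last column have to be passed identically to every server node, so it can help to assemble them once and reuse the string. The following is a minimal sketch in POSIX sh (not fish); the variable name `K3S_EXTRA_ARGS` is an illustrative choice for this example, not something k3s or k3sup requires.

```shell
# Collect the k3s server flags from the table into one reusable string.
# K3S_EXTRA_ARGS is a hypothetical variable name used only in this sketch.
K3S_EXTRA_ARGS=""
for flag in \
  "--disable traefik" \
  "--disable servicelb" \
  "--disable local-storage" \
  "--flannel-backend=none" \
  "--disable-network-policy"
do
  # Append with a single separating space (no leading space on the first item)
  K3S_EXTRA_ARGS="${K3S_EXTRA_ARGS:+$K3S_EXTRA_ARGS }$flag"
done

echo "$K3S_EXTRA_ARGS"
```

The resulting string can then be supplied as the value of k3sup's `--k3s-extra-args` option, quoted so that it is passed as a single argument.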

Environment Preparation

Required Tools

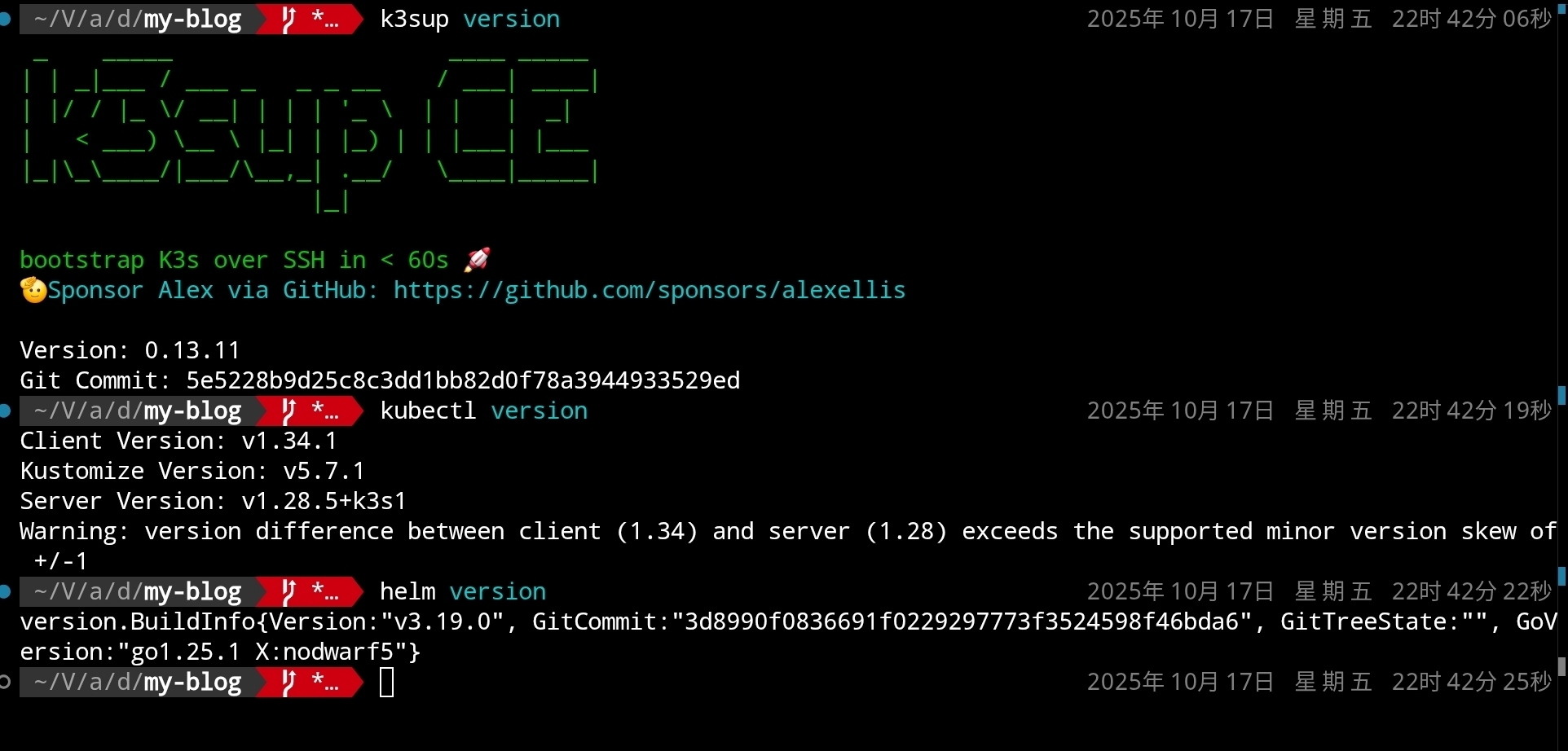

First, ensure that k3sup, kubectl, Helm and other tools are correctly installed (refer to the installation instructions provided by their respective GitHub projects):

k3sup version

kubectl version

helm version

As shown in the figure:

Server Requirements

Prepare at least three cloud servers (the example environment uses Ubuntu 24.04) to form a minimal three-node high-availability control plane; at least 4 CPU cores and 4 GB of RAM per node is recommended. Record each node's IP address in advance, confirm that your SSH public key has been distributed to every node, and note the path to the local private key. All of these are used in the following steps.
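Before deploying, the tool and SSH prerequisites can be verified with a short preflight script. This is only a sketch in POSIX sh (not fish): the function names `check_tools` and `check_ssh` are made up for this example, and the `accept-new` host key option assumes a reasonably recent OpenSSH client.

```shell
# Print the name of every required tool that is not on PATH.
# Returns 0 when all tools are present, 1 otherwise.
check_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# Check that a node accepts key-based SSH logins without prompting.
# Arguments: <ip> <private_key_path>; the root user matches this tutorial.
# BatchMode makes ssh fail instead of asking for a password.
check_ssh() {
  ssh -i "$2" \
    -o BatchMode=yes \
    -o ConnectTimeout=5 \
    -o StrictHostKeyChecking=accept-new \
    "root@$1" true
}

# Example usage (replace the placeholders with your own values):
#   check_tools k3sup kubectl helm
#   check_ssh <INITIAL_NODE_IP> <KEY_PATH>
```

Running `check_ssh` once per node before calling k3sup surfaces key or firewall problems early, when they are easier to diagnose than in the middle of an installation.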

Deploy Initial Control Plane

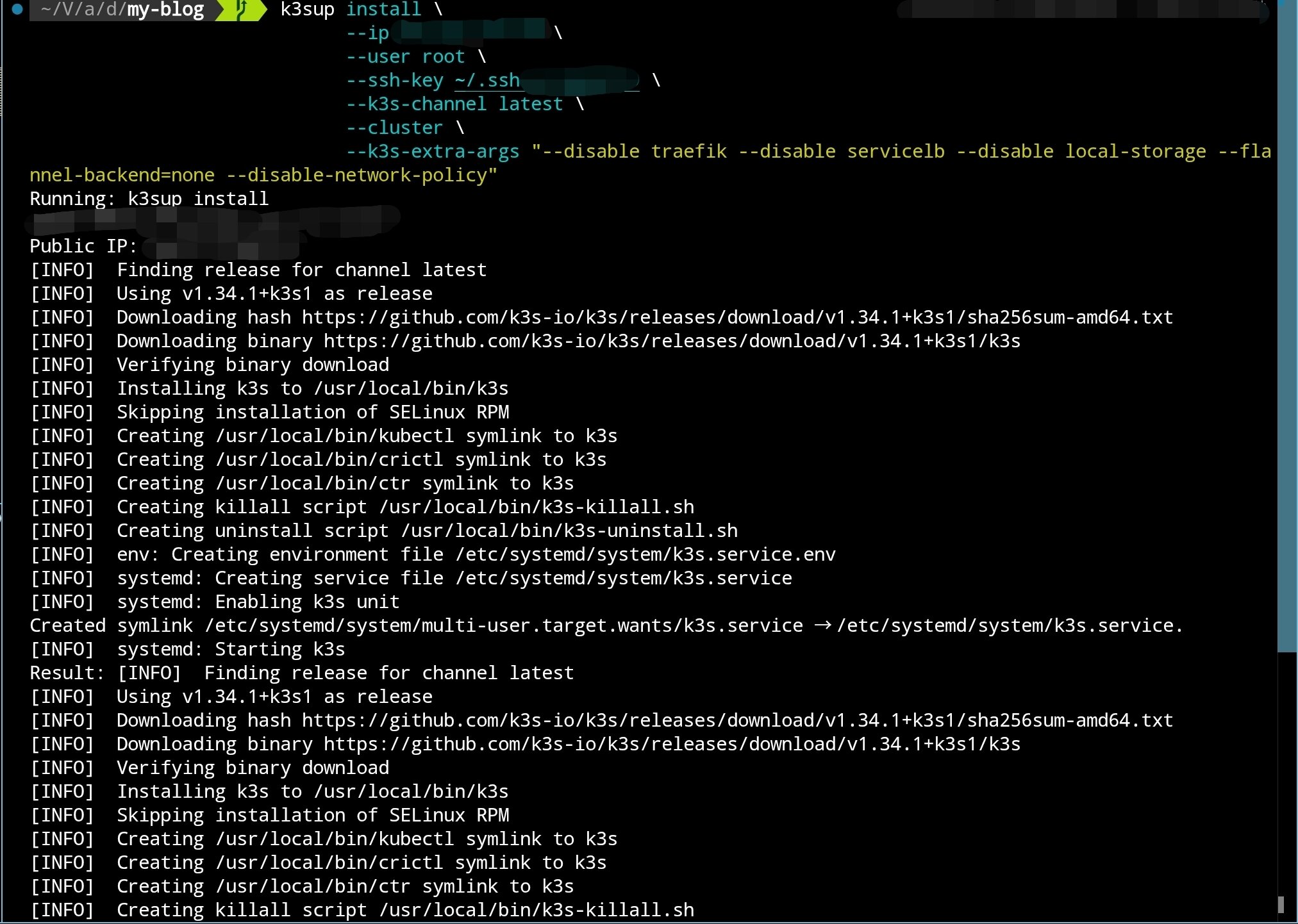

Use k3sup to deploy the initial control node:

k3sup install \

--ip <INITIAL_NODE_IP> \

--user root \

--ssh-key <KEY_PATH> \

--k3s-channel latest \

--cluster \

--k3s-extra-args "--disable traefik --disable servicelb --disable local-storage --flannel-backend=none --disable-network-policy"

As shown in the figure:

After installation, k3sup will automatically copy kubeconfig to the current directory. While you can use this configuration directly, a more robust approach is to merge it with existing configuration files.

Merge kubeconfig

- Back up your current kubeconfig (located at ~/.kube/config by default):

cp ~/.kube/config ~/.kube/config.backup

fish shell (for reference only):

cp $KUBECONFIG {$KUBECONFIG}.backup

- Merge old and new kubeconfig into a single flat file:

KUBECONFIG=~/.kube/config:./kubeconfig kubectl config view --flatten > ~/.kube/config.new

fish shell (for reference only):

KUBECONFIG=$KUBECONFIG:./kubeconfig kubectl config view --flatten > kubeconfig-merged.yaml

- After verifying the new file content is correct, overwrite the old configuration:

mv ~/.kube/config.new ~/.kube/config

fish shell:

mv ./kubeconfig-merged.yaml $KUBECONFIG

- Verify that the new context is active:

kubectl config get-contexts

kubectl config use-context default
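The merge steps above can be wrapped in a small function that refuses to overwrite the existing file if the merge fails. This is a sketch in POSIX sh (not fish), and `merge_kubeconfig` is a hypothetical helper name; it relies only on the `kubectl config view --flatten` command already used above.

```shell
# Merge a new kubeconfig into an existing one, keeping a backup.
# Arguments: <existing_config> <new_config>
merge_kubeconfig() {
  current="$1"
  incoming="$2"
  merged="${current}.new"

  # Keep a copy of the original so the change can be rolled back
  cp "$current" "${current}.backup" || return 1

  # kubectl treats KUBECONFIG as a colon-separated list and merges the entries
  if KUBECONFIG="$current:$incoming" kubectl config view --flatten > "$merged" \
     && [ -s "$merged" ]; then
    mv "$merged" "$current"
  else
    # Leave the original untouched if the merge failed or produced nothing
    rm -f "$merged"
    return 1
  fi
}

# Example usage:
#   merge_kubeconfig ~/.kube/config ./kubeconfig
```

Writing to a temporary file first matters because redirecting the output straight into the file being read would truncate it before kubectl has parsed it.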

Install Cilium (Replace Flannel)

Because we disabled the default CNI (Flannel) when installing k3s, Pods have no network yet and the node stays NotReady until a CNI plugin is installed. Deploy Cilium as planned to provide networking and network policy capabilities.

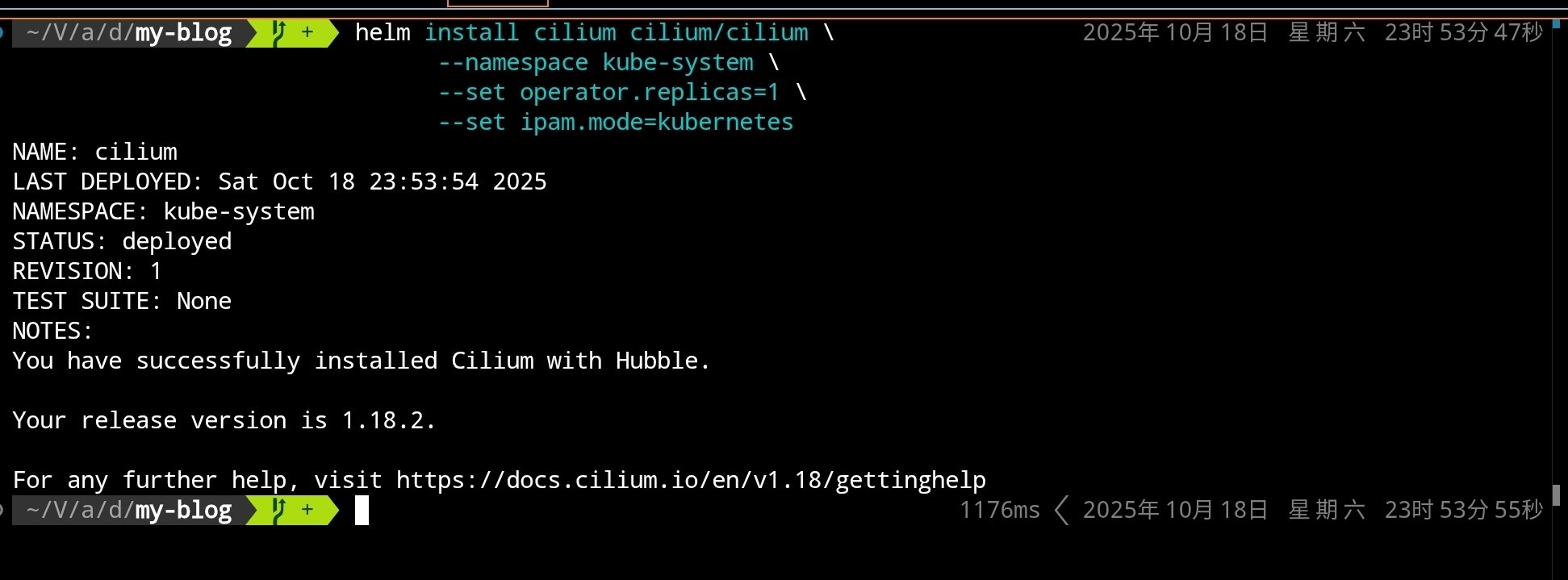

Install Cilium using Helm:

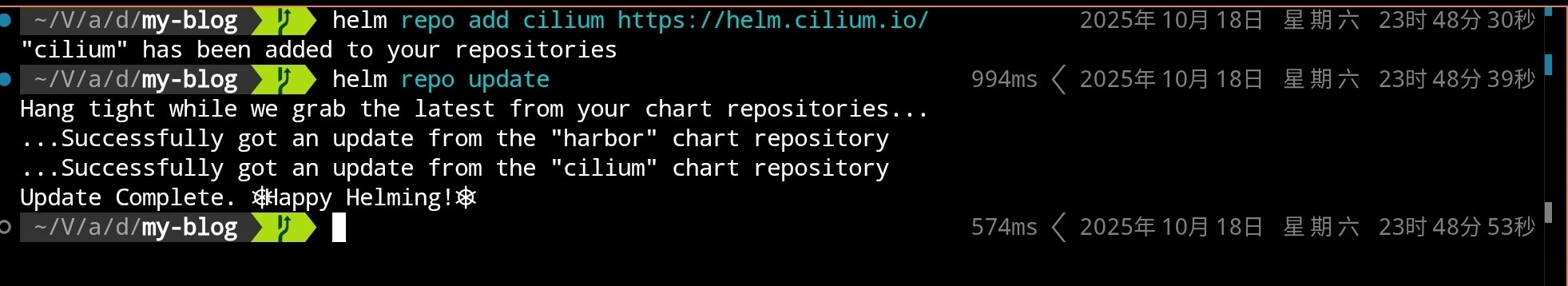

# Add Cilium Helm repository

helm repo add cilium https://helm.cilium.io/

# Update Helm repository

helm repo update

# Install Cilium CNI (single replica, default mode)

helm install cilium cilium/cilium \
  --namespace kube-system \
  --set operator.replicas=1 \
  --set ipam.mode=kubernetes

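If kube-proxy is already disabled in the cluster, you can additionally add --set kubeProxyReplacement=true (older Cilium releases used the value strict) together with --set k8sServiceHost=<API_SERVER_IP> --set k8sServicePort=6443, because Cilium then has to reach the API server without kube-proxy. This tutorial keeps the default value for broader compatibility.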

After execution, you should see:

Wait for Cilium components to start:

# Check Cilium-related Pod status

kubectl get pods -n kube-system -l k8s-app=cilium

# Check node status (should change from NotReady to Ready)

kubectl get nodes

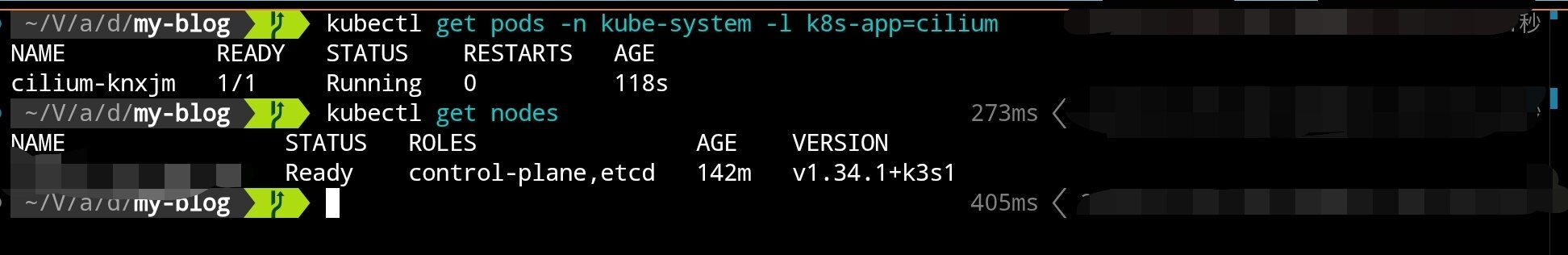

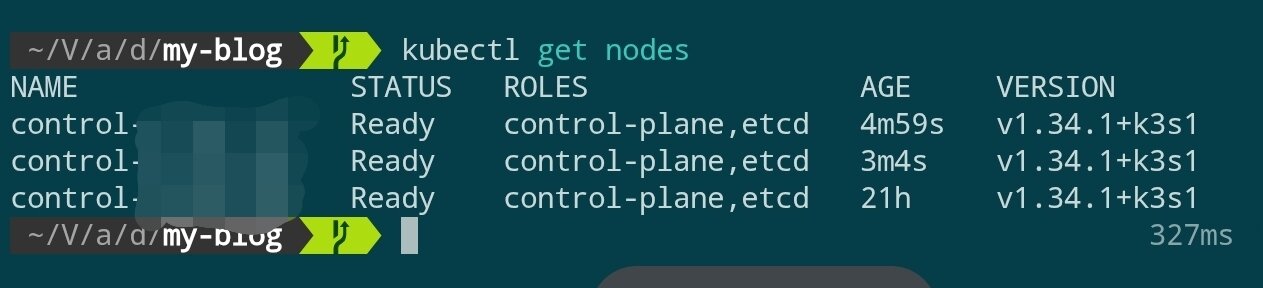

After switching to Cilium, nodes should change from NotReady to Ready. Next, use k3sup to join the other two control nodes.
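Instead of polling the two `kubectl get` commands by hand, the wait can be scripted. The sketch below is POSIX sh (not fish) and assumes the Cilium agent DaemonSet is named `cilium` in `kube-system`, which is what the Helm chart creates by default; `wait_for_cni` is a hypothetical helper name.

```shell
# Block until Cilium is rolled out and every node reports Ready.
# Argument: timeout as a kubectl duration string (defaults to 300s).
wait_for_cni() {
  timeout="${1:-300s}"
  # Wait for the Cilium agent DaemonSet to finish rolling out,
  # then wait for the Ready condition on all nodes.
  kubectl -n kube-system rollout status daemonset/cilium --timeout="$timeout" \
    && kubectl wait --for=condition=Ready nodes --all --timeout="$timeout"
}

# Example usage:
#   wait_for_cni 600s
```

The function returns a non-zero status if either wait times out, so it can gate the next step in a script.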

Scale Control Plane

To achieve high availability, we need at least 3 control nodes. Use the following command to join the second control node:

k3sup join \
  --ip <SECOND_NODE_IP> \
  --user root \
  --ssh-key <KEY_PATH> \
  --server-ip <INITIAL_NODE_IP> \
  --server \
  --k3s-channel latest \
  --k3s-extra-args "--disable traefik --disable servicelb --disable local-storage --flannel-backend=none --disable-network-policy"

Join the third control node in the same way:

k3sup join \
  --ip <THIRD_NODE_IP> \
  --user root \
  --ssh-key <KEY_PATH> \
  --server-ip <INITIAL_NODE_IP> \
  --server \
  --k3s-channel latest \
  --k3s-extra-args "--disable traefik --disable servicelb --disable local-storage --flannel-backend=none --disable-network-policy"

Wait a few minutes and then check the cluster status:

kubectl get nodes

When all three control nodes are in Ready status, the control plane setup is complete.

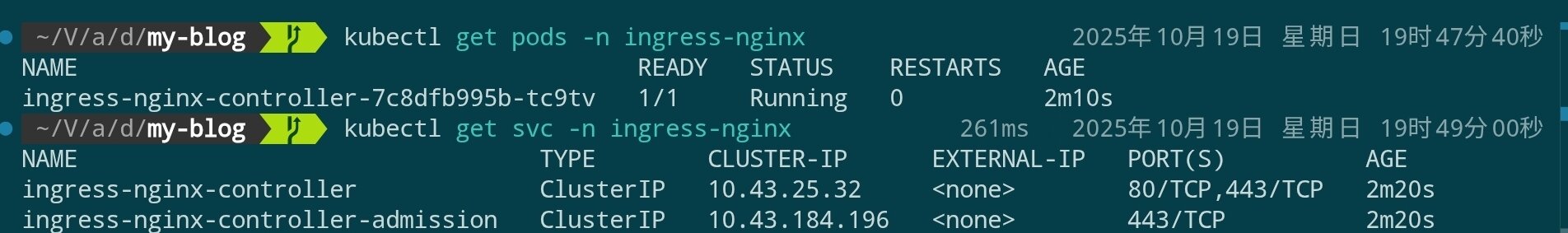
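The two join commands above differ only in the target IP, so they can also be expressed as a loop that joins the servers one after another. This is a sketch in POSIX sh (not fish); `join_servers` and the `DRY_RUN` switch are hypothetical names introduced for this example, while the k3sup flags are the same ones used above.

```shell
# Join each listed node as an additional server (control-plane) node.
# Arguments: <initial_server_ip> <ssh_key_path> <node_ip>...
# Set DRY_RUN=1 to print the commands instead of running them
# (the printed form does not show the quoting around the extra args).
join_servers() {
  server_ip="$1"; key="$2"; shift 2
  extra_args="--disable traefik --disable servicelb --disable local-storage --flannel-backend=none --disable-network-policy"
  for ip in "$@"; do
    # ${DRY_RUN:+echo} expands to "echo" only when DRY_RUN is set and non-empty
    ${DRY_RUN:+echo} k3sup join \
      --ip "$ip" \
      --user root \
      --ssh-key "$key" \
      --server-ip "$server_ip" \
      --server \
      --k3s-channel latest \
      --k3s-extra-args "$extra_args" || return 1
  done
}

# Example usage:
#   DRY_RUN=1 join_servers <INITIAL_NODE_IP> <KEY_PATH> <SECOND_NODE_IP> <THIRD_NODE_IP>
```

The loop stops at the first failed join, so a partially joined node can be inspected before the next one is attempted.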

Install Nginx Ingress Controller

Replace k3s's default Traefik with Nginx Ingress Controller:

# Add Nginx Ingress Helm repository

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

helm repo update

# Install Nginx Ingress Controller (may take a while)

helm install ingress-nginx ingress-nginx/ingress-nginx \
  --namespace ingress-nginx \
  --create-namespace \
  --set controller.hostPort.enabled=true \
  --set controller.hostPort.ports.http=80 \
  --set controller.hostPort.ports.https=443 \
  --set controller.service.type=ClusterIP

kubectl get pods -n ingress-nginx

kubectl get svc -n ingress-nginx

Install Longhorn Distributed Storage

Longhorn is a cloud-native distributed block storage system developed by Rancher, providing enterprise-grade features such as high availability, backup, and snapshots. Compared to k3s's default local-path-provisioner, Longhorn supports persistent storage across nodes.

Pre-requisite Dependency Check

Before installing Longhorn, ensure that each node has the necessary dependencies installed:

# Execute on each node (via SSH)

# Check and install open-iscsi

apt update

apt install -y open-iscsi nfs-common

# Enable at boot and start immediately

systemctl enable --now iscsid

systemctl status iscsid

If you want to use Ansible to install dependencies in bulk, refer to the following task snippet:

---
- name: Setup K3s nodes with Longhorn dependencies and CrowdSec
  hosts: k3s
  become: true
  vars:
    crowdsec_version: "latest"

  tasks:
    # ============================================
    # Longhorn Prerequisites
    # ============================================
    - name: Install Longhorn required packages
      ansible.builtin.apt:
        name:
          - open-iscsi   # iSCSI support for volume mounting
          - nfs-common   # NFS support for backup target
          - util-linux   # Provides nsenter and other utilities
          - curl         # For downloading and API calls
          - jq           # JSON processing for Longhorn CLI
        state: present
        update_cache: true
      tags: longhorn

    - name: Enable and start iscsid service
      ansible.builtin.systemd:
        name: iscsid
        enabled: true
        state: started
      tags: longhorn

    - name: Load iscsi_tcp kernel module
      community.general.modprobe:
        name: iscsi_tcp
        state: present
      tags: longhorn

    - name: Ensure iscsi_tcp loads on boot
      ansible.builtin.lineinfile:
        path: /etc/modules-load.d/iscsi.conf
        line: iscsi_tcp
        create: true
        mode: '0644'
      tags: longhorn

    - name: Check if multipathd is installed
      ansible.builtin.command: which multipathd
      register: multipathd_check
      failed_when: false
      changed_when: false
      tags: longhorn

    - name: Disable multipathd if installed (conflicts with Longhorn)
      ansible.builtin.systemd:
        name: multipathd
        enabled: false
        state: stopped
      when: multipathd_check.rc == 0
      tags: longhorn

Deploy Longhorn

Install Longhorn using Helm:

# Add Longhorn Helm repository

helm repo add longhorn https://charts.longhorn.io

helm repo update

# Install Longhorn (may take a while). The replica count is set to 1 here to save disk space, which means volumes have no redundancy; raise it if you need high availability.
helm install longhorn longhorn/longhorn \
  --namespace longhorn-system \
  --create-namespace \
  --set defaultSettings.defaultDataPath="/var/lib/longhorn" \
  --set persistence.defaultClass=true \
  --set persistence.defaultClassReplicaCount=1

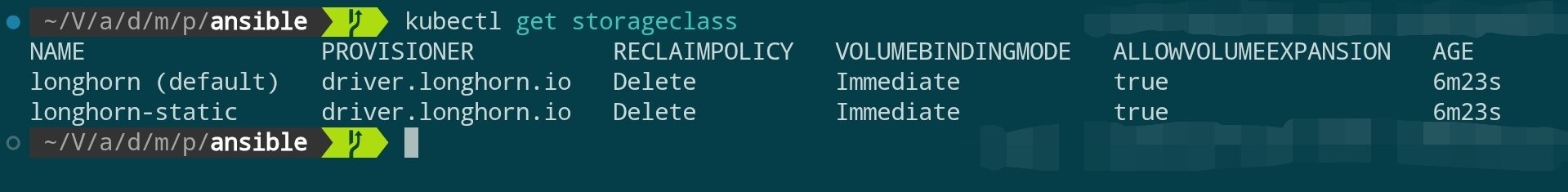

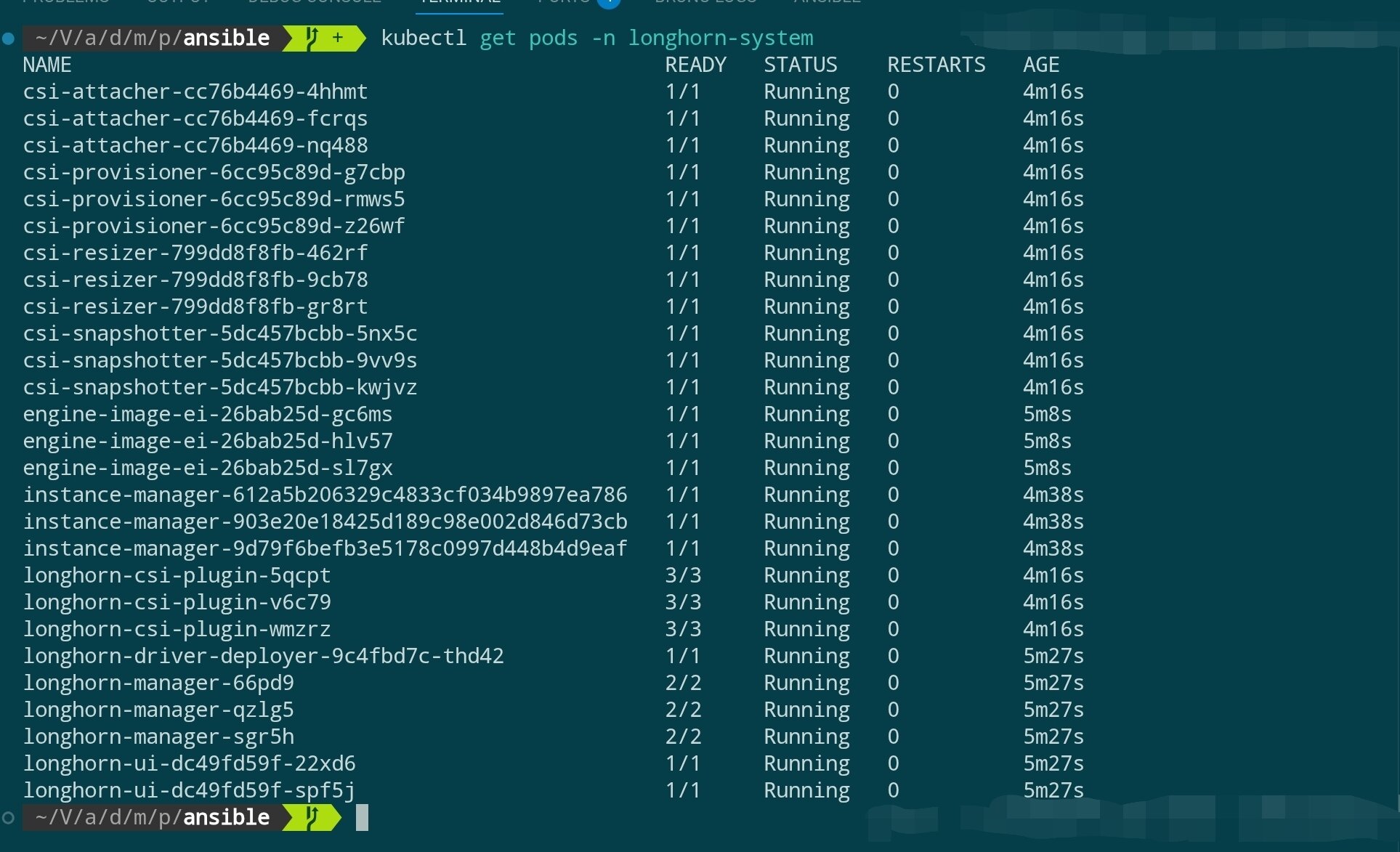

Wait for Longhorn components to start:

# Check Longhorn component status

kubectl get pods -n longhorn-system

# Check StorageClass

kubectl get storageclass
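To confirm that the StorageClass actually provisions volumes, one option is to create a small throwaway PersistentVolumeClaim. The sketch below is POSIX sh (not fish) and assumes the chart created a StorageClass named `longhorn`, which should match the output of the command above; the claim name `longhorn-test-pvc`, the 1Gi size, and the function name are arbitrary choices for this example.

```shell
# Print a small PVC manifest bound to the "longhorn" StorageClass.
# The claim name and size are arbitrary values chosen for this sketch.
longhorn_test_pvc() {
  cat <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: longhorn-test-pvc
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: longhorn
  resources:
    requests:
      storage: 1Gi
EOF
}

# Example usage:
#   longhorn_test_pvc | kubectl apply -f -
#   kubectl get pvc longhorn-test-pvc
#   longhorn_test_pvc | kubectl delete -f -
```

If the claim does not reach the Bound state, the Pods in the longhorn-system namespace are the first place to look.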

Access Longhorn UI (Optional)

Longhorn provides a Web UI for managing storage volumes. You can temporarily access it through port forwarding:

# Port forward to local machine

kubectl port-forward -n longhorn-system svc/longhorn-frontend 8081:80

# Access http://localhost:8081 in your browser

After viewing, press Ctrl+C in the terminal to stop port forwarding so that it does not keep occupying the local port.

Join Agent Nodes

So far, we have built a 3-node high-availability control plane. In production environments, we don't want to run actual applications on control-plane nodes (which would consume resources of core components like API Server and etcd).

Therefore, we need to join dedicated Agent nodes (also called Worker nodes) for running workloads (Pods).

Install Pre-requisite Dependencies

Like control-plane nodes, Agent nodes also need to meet Longhorn's dependencies (if you want these nodes to schedule and store persistent volumes).

Before joining, execute the following commands on all Agent nodes:

# Execute on each Agent node (via SSH)

apt update

apt install -y open-iscsi nfs-common

# Enable at boot and start immediately

systemctl enable --now iscsid

Execute Join Command

The command to add Agent nodes is almost identical to the one for control-plane nodes, with two key differences: omit the --server flag, and omit --k3s-extra-args, since the flags passed there are server-only options.

k3sup join \
  --ip <AGENT_NODE_IP> \
  --user root \
  --ssh-key <KEY_PATH> \
  --server-ip <ANY_CONTROL_NODE_IP> \
  --k3s-channel latest

Verify Node Status

You can add multiple Agent nodes at once. After adding, wait a few minutes for Cilium and Longhorn components to automatically schedule to new nodes.

Use kubectl to check cluster status:

kubectl get nodes -o wide

You should see the newly joined nodes with <none> in the ROLES column (k3s does not assign a role label to agent nodes, so <none> indicates the agent/worker role).

At the same time, you can monitor whether Cilium and Longhorn Pods successfully start on new nodes:

# Cilium agent should start on new nodes

kubectl get pods -n kube-system -o wide

# Longhorn instance-manager should also start on new nodes

kubectl get pods -n longhorn-system -o wide

The cluster now has a highly available control plane and dedicated worker nodes for running applications.

Next Steps

- Configure GitOps tools like Argo CD and Flux to standardize cluster declarative management processes.

- Deploy observability components like Prometheus, Loki, and Grafana to improve monitoring and logging systems.

- Use Ansible Playbooks to automate node initialization and component upgrades, reducing subsequent operational costs.